My research interests are in Human-Computer Interaction (HCI) and Human-Centred AI, with a current focus on educational technology.

Current Projects

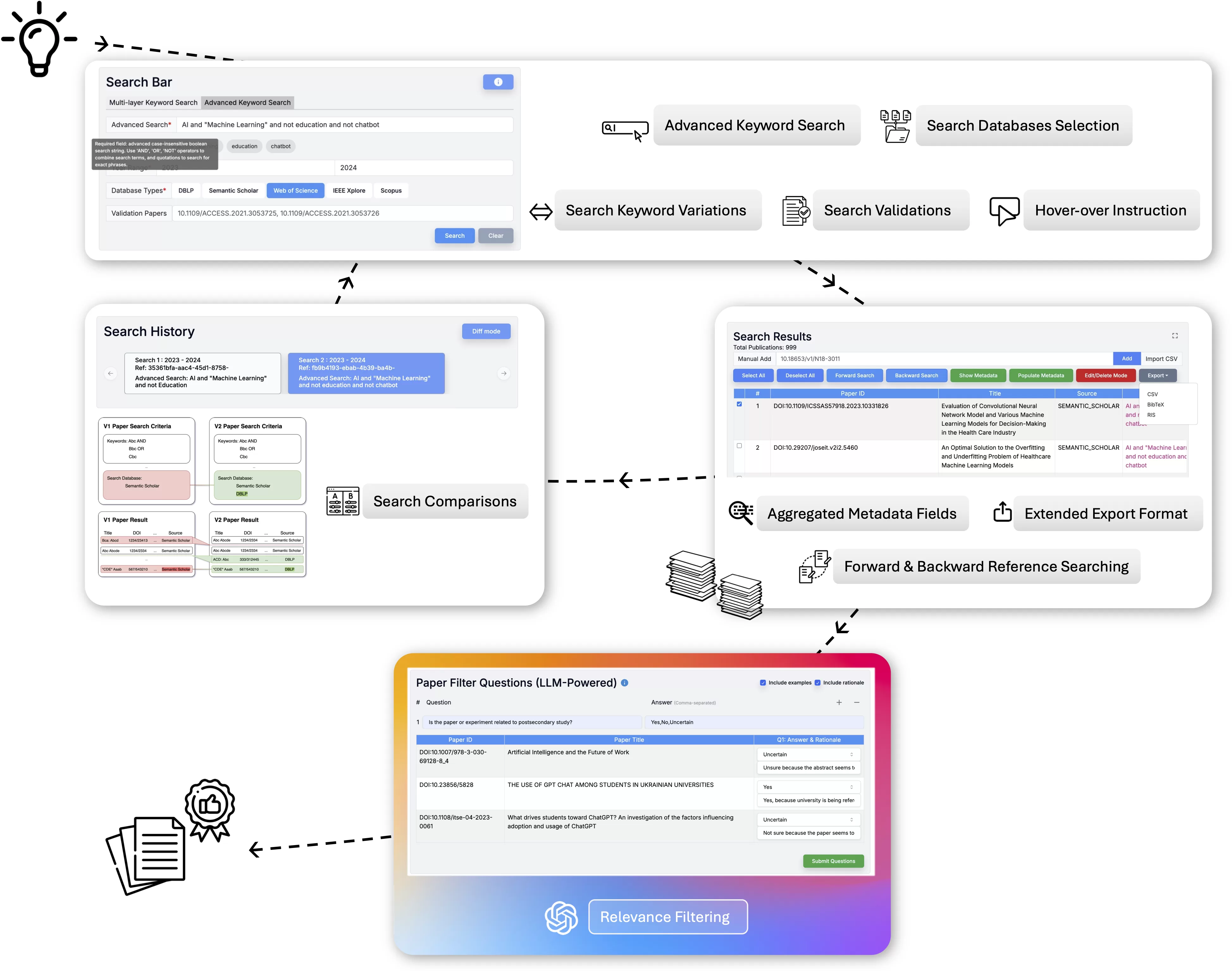

Design, Implementation, and Evaluation of a Novice Systematic Review Assistant

Advised by Profs. Tingting Zhu, Carolina Nobre, and Michael Liut

Papers

ARC: Automated Review Companion Leveraging User-Centered Design for Systematic Literature Reviews

(In Submission)

Ye, R., Sibia, N., Zavaleta Bernuy, A., Zhu, T., Nobre, C., & Liut, M. (2024). OSF Preprint.

Project Description

We developed ARC, an open-source Automated Review Companion designed to streamline systematic literature reviews (SLRs), particularly in computing. SLRs are essential for synthesizing research, but they are time-consuming and labor-intensive. ARC addresses these challenges by automating tasks such as literature searches, data extraction, and reference tracking, making the process more efficient while supporting transparency and reproducibility.

Developed through a user-centered design approach, ARC was iteratively refined based on feedback from 20 experienced computing researchers. Key features include support for multiple scholarly databases, automated forward and backward reference searching, and metadata extraction. The tool follows the FAIR (Findable, Accessible, Interoperable, and Reusable) principles to promote open, reproducible research practices. Overall, ARC aims to make systematic review tools more usable and effective, lowering the barrier to conducting thorough, rigorous research in computing and other fields.

Past Projects

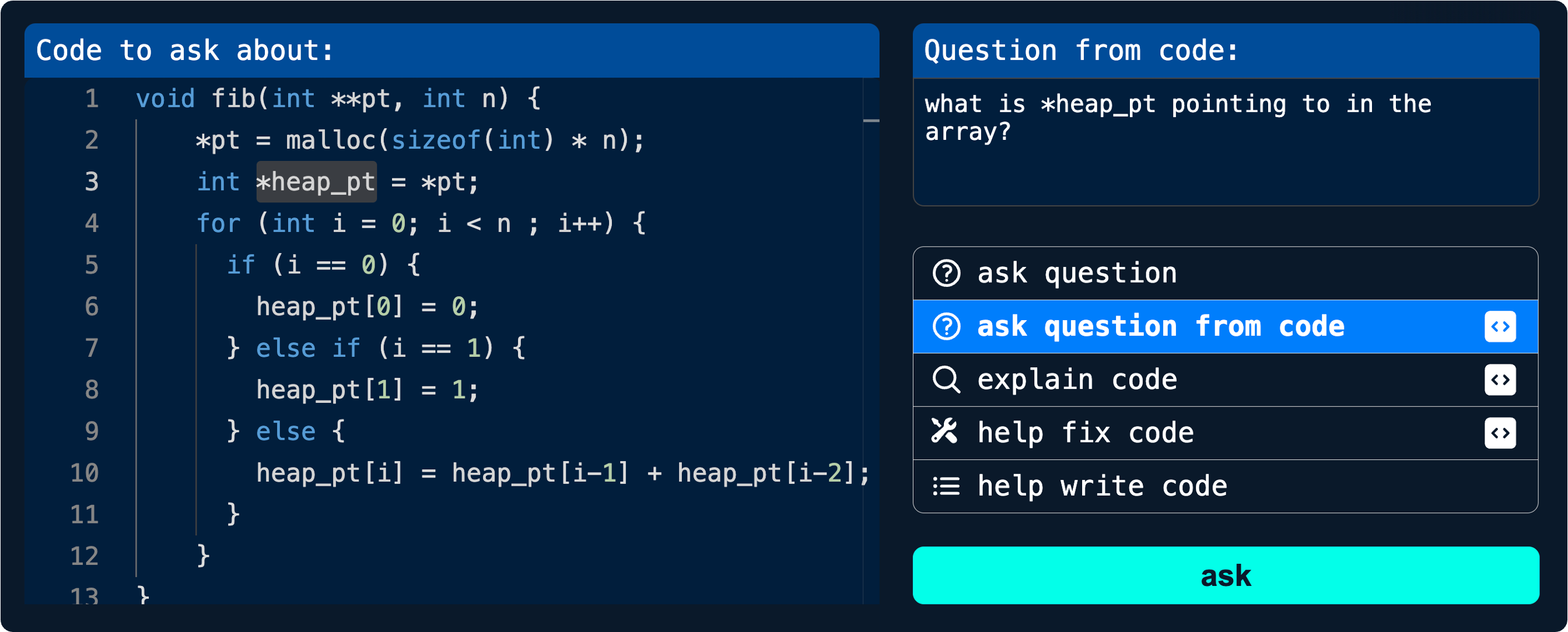

Design and Evaluation of New Programming Tools for Novices using AI Coding Assistants

Advised by Profs. Tovi Grossman and Michelle Craig

Mentored by Ph.D. student Majeed Kazemitabaar

Papers

CodeAid: Evaluating a Classroom Deployment of an LLM-based Programming Assistant that Balances Student and Educator Needs.

Kazemitabaar, M., Ye, R., Wang, X., Henley, A., Denny, P., Craig, M., & Grossman, T. (2024, May). In Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems (CHI ’24).

Project Description

We introduced CodeAid, an LLM-based programming assistant. We deployed CodeAid in a large university class of 700 students and conducted a thematic analysis of its 8,000 usages, supplemented by weekly surveys and student interviews. We also gathered feedback from eight programming educators. Results showed that most students used CodeAid to understand concepts and debug their code, though some students directly asked for code solutions. While educators valued its educational merits, they also raised concerns about occasional inaccuracies and the potential for students to rely on tools like ChatGPT instead.

Investigating the Impact of Online Homework Reminders Using Randomized A/B Comparisons

Advised by Profs. Joseph Jay Williams, Andrew Petersen, and Michael Liut

Mentored by Ph.D. Candidate Angela Zavaleta-Bernuy

Papers

![SIGCSE 2024 figure: Comparison of two reminder emails. Two versions of the email are located on the left and right of the figure, with the middle section highlighting the similarities and differences between the two emails. Left Email (Control Group): Subject: [DB course code] Upcoming Deadlines Content: 'This week in DB the following assessments are due: [Thursday, Feb 17th, 12:00pm] Prep Week 5; [Friday, Feb 11th, 12:00pm] Lab 2.' Reminder to complete readings from Chapter 5 (5.1-5.6) and try some problems from Ex. 5.1-5.3. Reference to programming with 'AI Copilot on GitHub' with suggestions for code lines or functions inside the editor. Sign-off: 'All the best, [DB] teaching team.' Postscript praising the reader's progress, a reminder that the email is sent to all students, a contact email (redacted), and an unsubscribe link. Right Email (Treatment Group): Subject: [DB course code] Programming with AI and Upcoming Deadlines Content: Begins with 'Good Evening!' Introduces 'AI Copilot on GitHub' emphasizing its newness, its usefulness to experienced programmers, and those unfamiliar with syntax. Lists the same assessments due: [Thursday, Feb 17th, 12:00pm] Prep Week 5; [Friday, Feb 11th, 12:00pm] Lab 2. Reiterates the reminder about Chapter 5 readings (5.1-5.6) and exercises. Adds a note about visiting office hours and a motivational statement: 'One month down, only two more to go! You've got this! happy face' Sign-off: 'All the best, [Instructor's name].' Shares a quote: '“Before software should be reusable, it should be usable.” - Ralph Johnson.' Ends with the same postscript as the control email, praising progress, informing the email goes to all, a redacted contact email, and an unsubscribe link. Blue Boxes compare the two versions of email in the middle: More descriptive subject line: The treatment email's subject includes 'Programming with AI.' Extra greeting: The treatment email starts with 'Good Evening!' 
Same resources, with a more personal touch: The treatment email explains 'AI Copilot on GitHub' in a more personalized manner. Same course reminder: Both emails list the assessments due. Extra instructor reminder: The treatment email suggests visiting office hours. More personalized sign-off and extra quote: The treatment email includes an instructor's name in the sign-off and a quote by Ralph Johnson. Same disclaimer and unsubscribe link: Both emails provide an unsubscribe option.](https://harryye.com/wp-content/uploads/2024/03/sigcse-24-experiment-1024x578.png)

Student Interaction with Instructor Emails in Introductory and Upper-Year Computing Courses.

Zavaleta Bernuy, A., Ye, R., Sibia, N., Nalluri, R., Williams, J. J., Petersen, A., Smith, E., Simion, B., & Liut, M. (2024, March). In Proceedings of the 55th ACM Technical Symposium on Computer Science Education (SIGCSE ’24).

Do Students Read Instructor Emails? A Case Study of Intervention Email Open Rates

Zavaleta Bernuy, A., Ye, R., Tran, E., Mandal, A., Shaikh, H., Simion, B., Petersen, A., Liut, M., & Williams, J. J. (2023, November). In Proceedings of the 23rd Koli Calling International Conference on Computing Education Research (Koli Calling ’23).

🏆 Behavioral Consequences of Reminder Emails on Students’ Academic Performance: a Real-world Deployment

Ye, R., Chen, P., Mao, Y., Wang-Lin, A., Shaikh, H., Zavaleta Bernuy, A., & Williams, J. J. (2022, September). In Proceedings of the 23rd Annual Conference on Information Technology Education (SIGITE ’22).

Posters

Chen, P.*, Ye, R.*, & Mao, Y.* (2021, March). Behavioral consequences of reminder emails on students’ performances for homework: a real-world deployment. University of Toronto Undergraduate Poster Fair.

*contributed equally

Project Description

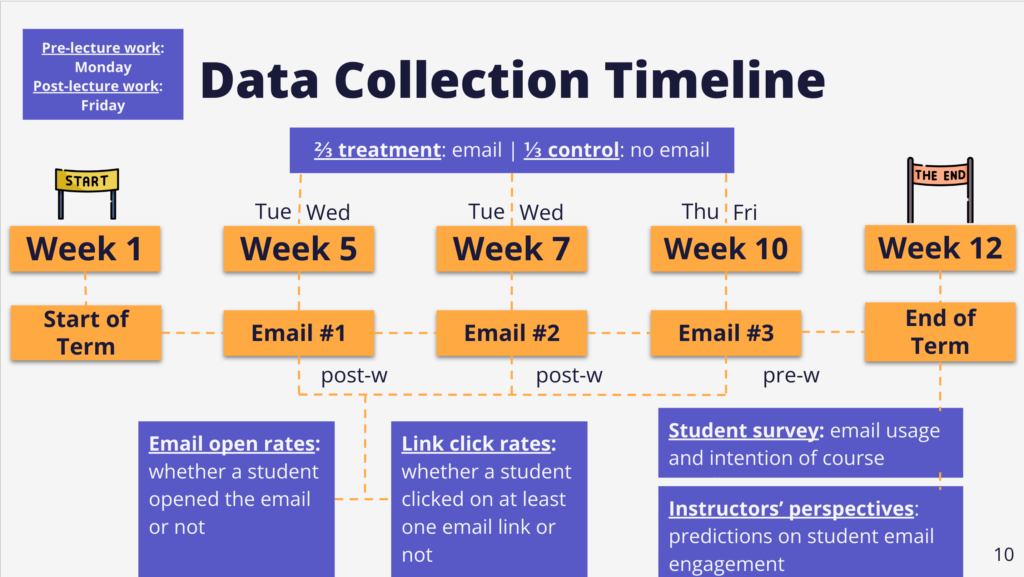

The Intelligent Adaptive Interventions (IAI) Lab’s OnTrack project aims to support and improve students’ learning behaviours through homework reminder emails.

Randomized A/B experiments provide one source of evidence for designing instructional interventions and understanding how students learn. However, field experiments raise the question of how the data can be used to rapidly benefit both current and future students. Adaptive experiments offer one answer: by shifting more students toward better-performing conditions while the study is still running, they increase the chances that current students also obtain better learning outcomes.

Our goal is to provide a standalone system that can send students reminders and prompts to reflect. Because instructors have their own styles and approaches to messaging, the OnTrack messaging service is meant to be a separate channel, clearly distinct from high-priority instructor announcements.

OnTrack uses randomized A/B comparisons to evaluate different reminders, or alternative ideas about how best to encourage students to start their work earlier, and, crucially, to measure the impact of these interventions on student behaviour. We further evaluate the effect of homework reminder emails through adaptive experiments that use multi-armed bandit algorithms to identify the best-performing treatment and allocate more students to it. The rationales for running OnTrack as a separate channel are:

- With coursework delivered online, students can receive extra support, and the IAI Lab invests effort in crafting different messages based on student feedback.

- OnTrack emails won’t ‘interfere’ with instructor communication or lead students to start ignoring instructors’ emails (concerns raised by instructors, which we share).

- Instructors can still access data from our experiments and suggest or request the email variants they would like us to test.
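The adaptive experiments described above shift more students toward better-performing reminder versions as evidence accumulates. This page does not say which bandit algorithm OnTrack uses, so the sketch below is only an illustration under stated assumptions: it uses Thompson sampling with uniform Beta(1, 1) priors and assumes a binary outcome per student (for example, whether they started the homework early). The arm names `control` and `treatment`, the helper names, and the simulated success rates are all hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class Arm:
    """Beta posterior over one (hypothetical) email version's success rate."""
    name: str
    successes: int = 0
    failures: int = 0

    def sample(self, rng: random.Random) -> float:
        # Draw a plausible success rate from Beta(1 + successes, 1 + failures),
        # i.e. a uniform Beta(1, 1) prior updated with the observed outcomes.
        return rng.betavariate(1 + self.successes, 1 + self.failures)

    def update(self, reward: int) -> None:
        # Record one binary outcome (assumed: 1 = student started early).
        if reward:
            self.successes += 1
        else:
            self.failures += 1


def choose_arm(arms: list[Arm], rng: random.Random) -> Arm:
    """Thompson sampling: assign the next student to the arm with the highest draw."""
    return max(arms, key=lambda arm: arm.sample(rng))


def run_simulation(true_rates: dict[str, float], n_students: int, seed: int = 0) -> dict[str, int]:
    """Simulate sequential assignment and return how many students each arm received.

    true_rates holds made-up success probabilities used only to simulate outcomes.
    """
    rng = random.Random(seed)
    arms = [Arm(name) for name in true_rates]
    counts = {name: 0 for name in true_rates}
    for _ in range(n_students):
        arm = choose_arm(arms, rng)
        counts[arm.name] += 1
        # Simulated (hypothetical) outcome for this student.
        reward = 1 if rng.random() < true_rates[arm.name] else 0
        arm.update(reward)
    return counts


if __name__ == "__main__":
    # Hypothetical rates: the simulated "treatment" email works better.
    print(run_simulation({"control": 0.30, "treatment": 0.45}, n_students=1000, seed=42))
```

Thompson sampling is only one common bandit strategy; epsilon-greedy or UCB would slot into the same loop. Because each assignment is driven by a random draw from every arm's posterior, weaker versions still receive some students, which keeps the comparison informative while most students get the version that currently looks best. In a real course deployment, outcomes would typically arrive after a deadline, so updates would likely happen in batches rather than one student at a time.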

After several deployments at the University of Toronto St. George (UTSG) and Mississauga (UTM) campuses, OnTrack has gathered valuable insights into how students perceive different aspects of reminder emails, such as subject lines, calendar events, links to online homework, and motivational messages. OnTrack is now experimenting with additional ideas, such as drawing on cognitive psychology in the design of the emails.